![Dreamlinux, OpenGL test]()

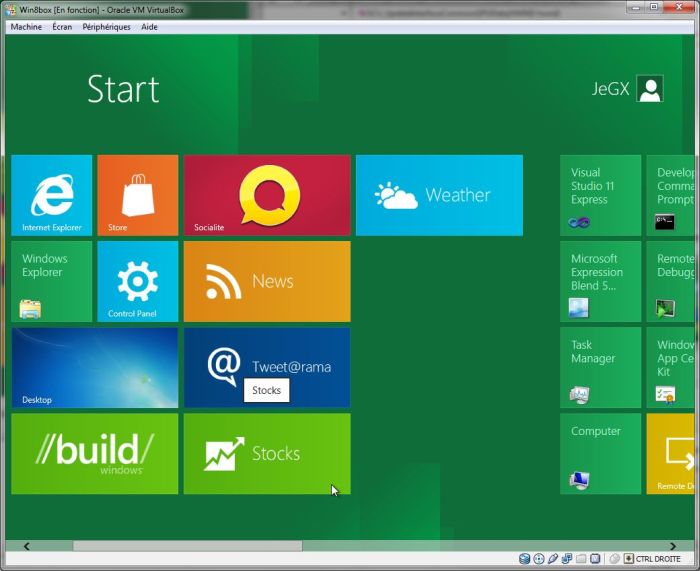

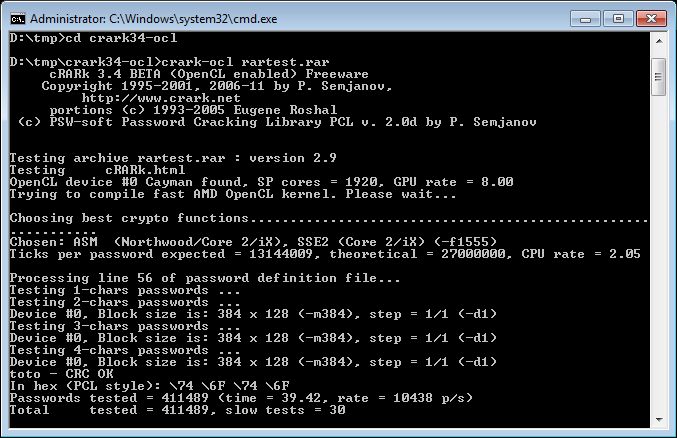

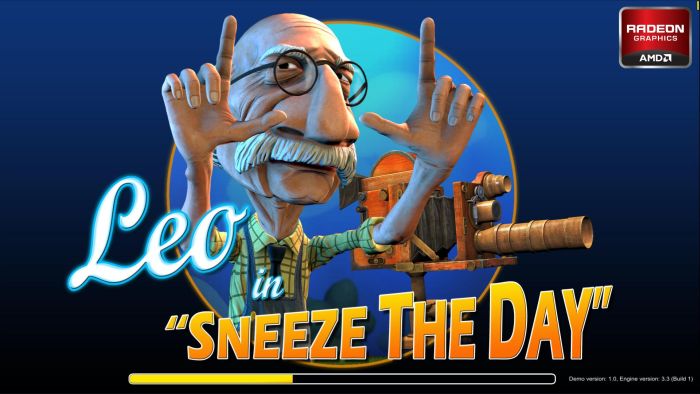

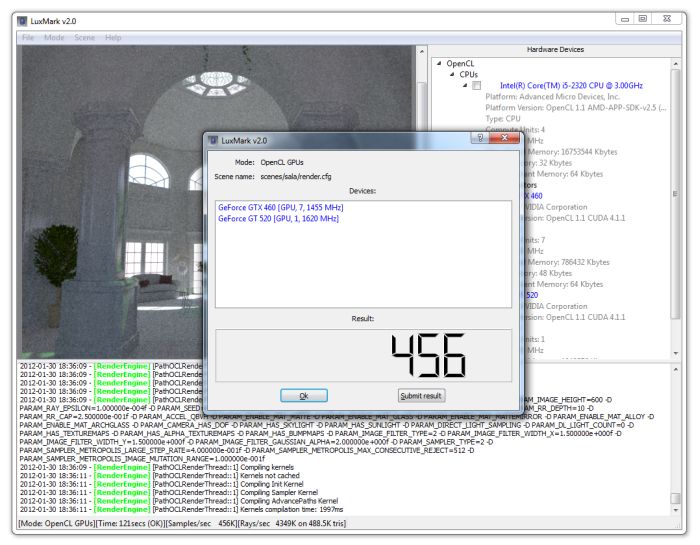

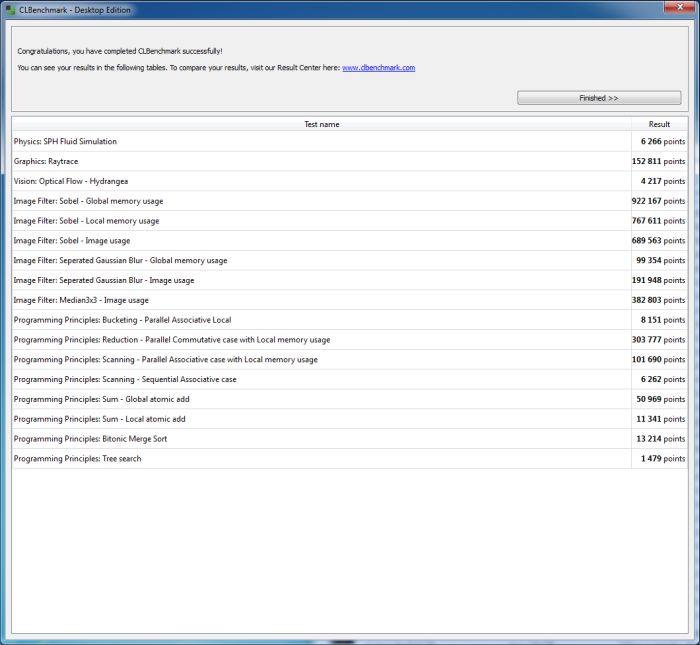

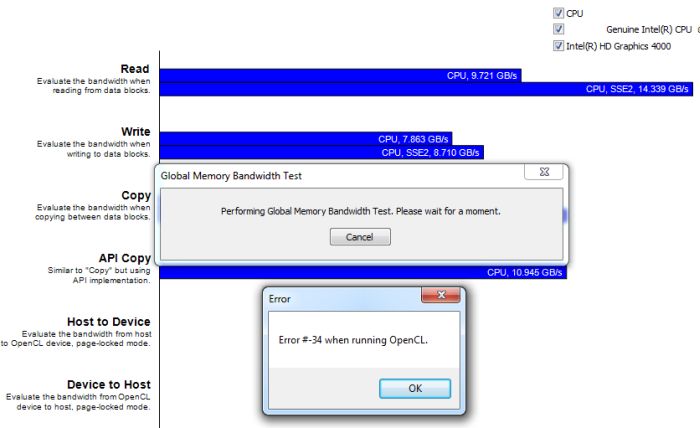

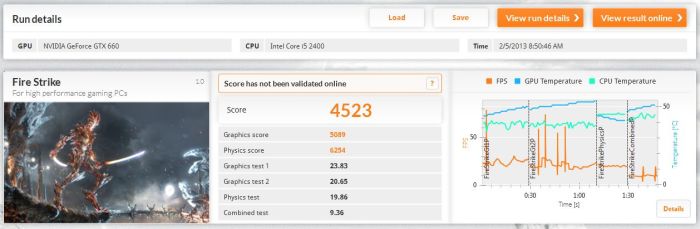

In my recent programming sessions under Linux, I used NVIDIA’s proprietary, closed-source drivers (the 64-bit version, under Linux Mint 10 64-bit) without noticing much difference from the Windows ones. A few days ago, I decided to test Dreamlinux 5.0, a 32-bit Linux distro based on Debian 7 Wheezy. Dreamlinux comes with the Nouveau driver (NouVeau?), the open-source driver for NVIDIA cards. That was a nice opportunity to play with this famous driver for the first time and, above all, to clarify some terms like Gallium3D, llvmpipe, Mesa3D or REnouveau…

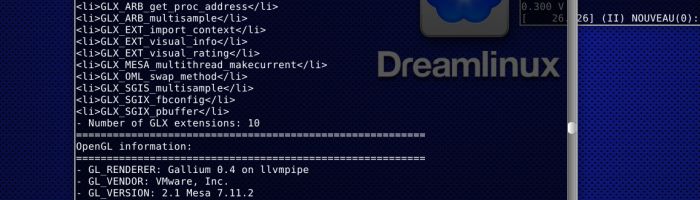

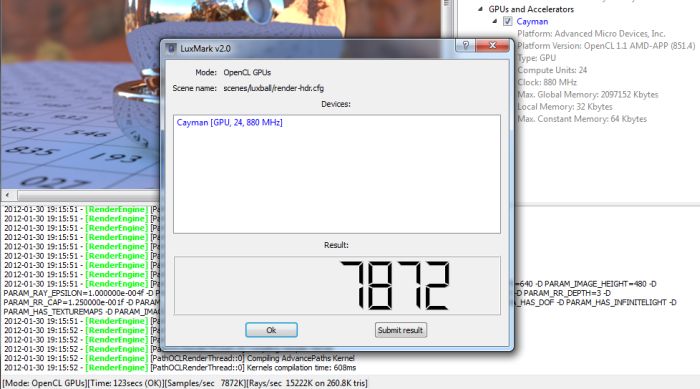

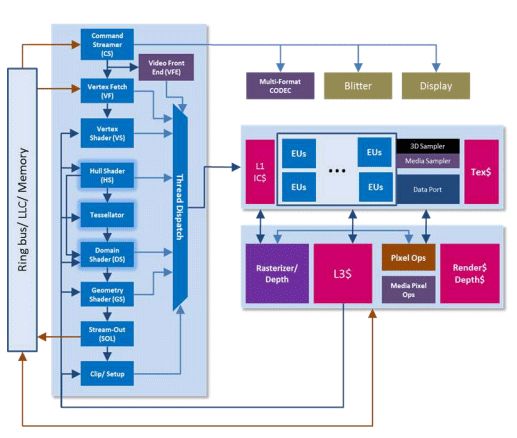

At the top of the stack, there is Mesa3D:

> Mesa is an open-source implementation of the OpenGL specification – a system for rendering interactive 3D graphics.
>
> A variety of device drivers allows Mesa to be used in many different environments ranging from software emulation to complete hardware acceleration for modern GPUs.

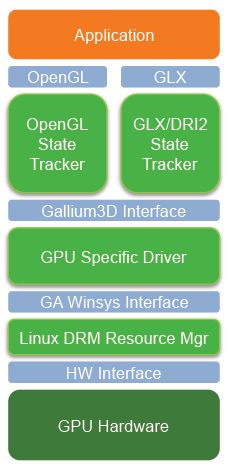

To talk to our GPUs, Mesa uses an interface called Gallium3D, which is progressively replacing the classic Mesa driver model used by the older DRI (Direct Rendering Infrastructure) drivers. Gallium3D is a redesign of Mesa’s device driver model:

> Gallium3D is a new architecture for building 3D graphics drivers. Initially supporting Mesa and Linux graphics drivers, Gallium3D is designed to allow portability to all major operating systems and graphics interfaces.

The Nouveau driver is a specific implementation (GPU Specific Driver) of the Gallium3D interface:

![Gallium3D Architecture]()
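
To make this layering concrete, here is a minimal Python sketch, assuming a driver can be reduced to a single drawing entry point. The real Gallium3D interface is a set of C structures (such as `pipe_screen` and `pipe_context`); the classes `PipeDriver`, `Softpipe`, `NouveauNVA0` and `GLStateTracker` below are hypothetical simplifications. They only show why the API layer (Mesa’s OpenGL state tracker) does not need to know which GPU-specific driver sits underneath.

```python
from abc import ABC, abstractmethod


class PipeDriver(ABC):
    """Hypothetical stand-in for the Gallium3D driver interface."""

    name = "abstract"

    @abstractmethod
    def draw(self, vertices):
        """Draw a list of (x, y, z) vertices as triangles; return a log line."""


class Softpipe(PipeDriver):
    name = "softpipe"

    def draw(self, vertices):
        # A software driver would rasterize on the CPU here.
        return f"{self.name}: {len(vertices) // 3} triangle(s) rasterized on the CPU"


class NouveauNVA0(PipeDriver):
    name = "nouveau NVA0"

    def draw(self, vertices):
        # A hardware driver would build a GPU command buffer here.
        return f"{self.name}: {len(vertices) // 3} triangle(s) sent to the GPU"


class GLStateTracker:
    """API layer: collects OpenGL-style calls and forwards them to any driver."""

    def __init__(self, driver: PipeDriver):
        self.driver = driver
        self.pending = []

    def vertex(self, x, y, z=0.0):
        self.pending.append((x, y, z))

    def flush(self):
        log = self.driver.draw(self.pending)
        self.pending = []
        return log


if __name__ == "__main__":
    # The same state tracker code runs unchanged on both drivers.
    for driver in (Softpipe(), NouveauNVA0()):
        gl = GLStateTracker(driver)
        for v in [(0, 0), (1, 0), (0, 1)]:
            gl.vertex(*v)
        print(gl.flush())
```

Swapping the driver object is the whole point: the state tracker stays the same whether the work ends up on the CPU or on the GPU.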

The Nouveau driver does not support every GeForce, especially the latest ones (GTX 500 series). Nouveau’s developers have to reverse-engineer NVIDIA’s proprietary drivers (by analyzing memory changes; see the REnouveau project, Reverse Engineering for nouveau), and this is really tough work!
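
The “analyzing memory changes” idea can be illustrated with a small Python sketch: take a snapshot of a memory region, let the proprietary driver perform one known operation, take a second snapshot, and list what changed. This covers only the diffing step, assuming the dumps are already available as byte strings. It is not REnouveau’s code, and capturing the dumps from a real GPU (the hard part) is skipped entirely.

```python
def diff_snapshots(before: bytes, after: bytes, word_size: int = 4):
    """Compare two equally sized memory dumps word by word.

    Returns a list of (offset, old_value, new_value) for every word that
    changed, reading words as little-endian unsigned integers (an assumption
    that fits x86 hosts).
    """
    if len(before) != len(after):
        raise ValueError("snapshots must have the same length")
    changes = []
    usable = len(before) - len(before) % word_size  # ignore a trailing partial word
    for offset in range(0, usable, word_size):
        old = int.from_bytes(before[offset:offset + word_size], "little")
        new = int.from_bytes(after[offset:offset + word_size], "little")
        if old != new:
            changes.append((offset, old, new))
    return changes


if __name__ == "__main__":
    # Hypothetical 16-byte dumps taken before and after one OpenGL call.
    before = bytes(16)
    after = bytearray(16)
    after[4:8] = (0x00001234).to_bytes(4, "little")
    for offset, old, new in diff_snapshots(before, bytes(after)):
        print(f"offset 0x{offset:02x}: 0x{old:08x} -> 0x{new:08x}")
```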

When no accelerated renderer is available, as with the GeForce GTX 580, a software renderer (or rasterizer) is used instead. Softpipe is the reference driver for Gallium3D, but it’s slow. LLVMpipe is a newer and faster software renderer for Gallium3D (x86 processors only):

> The Gallium llvmpipe driver is a software rasterizer that uses LLVM to do runtime code generation. Shaders, point/line/triangle rasterization and vertex processing are implemented with LLVM IR which is translated to x86 or x86-64 machine code. Also, the driver is multithreaded to take advantage of multiple CPU cores (up to 8 at this time). It’s the fastest software rasterizer for Mesa.
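
Two ideas from that description, triangle rasterization and spreading the work over several CPU cores, can be sketched in plain Python. This is a toy under stated assumptions: the 8 by 8 tile size and all function names are hypothetical, there is no LLVM code generation at all, and because of Python’s global interpreter lock the threads only show how the screen is split into independent tiles, not a real speedup (llvmpipe runs native, JIT-compiled code on its threads).

```python
from concurrent.futures import ThreadPoolExecutor

TILE = 8  # hypothetical tile size in pixels


def edge(ax, ay, bx, by, px, py):
    """Edge function: twice the signed area of (A, B, P); its sign tells
    which side of the line A->B the point P lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def raster_tile(tri, x0, y0, x1, y1):
    """Return the pixels of one tile whose centers lie inside triangle `tri`."""
    (ax, ay), (bx, by), (cx, cy) = tri
    area = edge(ax, ay, bx, by, cx, cy)
    covered = []
    if area == 0:  # degenerate triangle covers nothing
        return covered
    for y in range(y0, y1):
        for x in range(x0, x1):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(bx, by, cx, cy, px, py)
            w1 = edge(cx, cy, ax, ay, px, py)
            w2 = edge(ax, ay, bx, by, px, py)
            # Inside when every edge function has the same sign as the area
            # (pixels exactly on an edge count as inside in this toy version).
            if area > 0:
                inside = w0 >= 0 and w1 >= 0 and w2 >= 0
            else:
                inside = w0 <= 0 and w1 <= 0 and w2 <= 0
            if inside:
                covered.append((x, y))
    return covered


def rasterize(tri, width, height, threads=8):
    """Split the framebuffer into tiles and rasterize them on a thread pool."""
    tiles = [(x, y, min(x + TILE, width), min(y + TILE, height))
             for y in range(0, height, TILE)
             for x in range(0, width, TILE)]
    with ThreadPoolExecutor(max_workers=threads) as pool:
        per_tile = pool.map(lambda t: raster_tile(tri, *t), tiles)
        return sorted(p for pixels in per_tile for p in pixels)


if __name__ == "__main__":
    pixels = rasterize(((0, 0), (16, 0), (0, 16)), 16, 16)
    print(len(pixels), "pixels covered")
```

Because each tile only reads the triangle and writes its own pixels, tiles need no locking, which is what makes this kind of split attractive for a multithreaded rasterizer.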

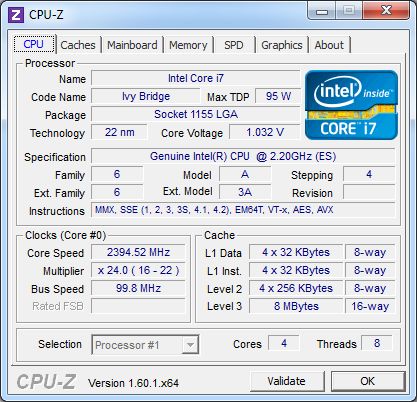

Both the Nouveau and LLVMpipe renderers expose the OpenGL 2.1 API, the highest version supported by the current release of Mesa3D (v7.11). Mesa3D v8.0 will expose OpenGL 3.0, at least for Intel Sandy Bridge and Ivy Bridge GPUs.
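
To check which renderer and OpenGL version Mesa actually exposes, `glxinfo` (from the mesa-utils package on Debian-based distros) prints an “OpenGL renderer string” and an “OpenGL version string”. Here is a small Python sketch that extracts both from that output. The helper names are hypothetical, and the sample text is illustrative, not captured on my test machines.

```python
import re


def parse_glxinfo(text):
    """Return (renderer, (major, minor)) from glxinfo output; None for missing fields."""
    renderer, version = None, None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("OpenGL renderer string:"):
            renderer = line.split(":", 1)[1].strip()
        elif line.startswith("OpenGL version string:"):
            # e.g. "2.1 Mesa 7.11": the GL version comes first, then the Mesa version
            match = re.match(r"(\d+)\.(\d+)", line.split(":", 1)[1].strip())
            if match:
                version = (int(match.group(1)), int(match.group(2)))
    return renderer, version


def is_software(renderer):
    """True when the renderer string names one of Gallium3D's CPU rasterizers."""
    return renderer is not None and any(
        name in renderer.lower() for name in ("llvmpipe", "softpipe"))


# Illustrative sample in glxinfo's format (not a real capture).
SAMPLE = """\
OpenGL renderer string: Gallium 0.4 on llvmpipe (LLVM 0x209)
OpenGL version string: 2.1 Mesa 7.11
"""

if __name__ == "__main__":
    renderer, version = parse_glxinfo(SAMPLE)
    print(renderer, version, "software" if is_software(renderer) else "hardware")
    print("OpenGL 3.0 available:", version is not None and version >= (3, 0))
```

On a live system, the same functions can be fed the text printed by the `glxinfo` command.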

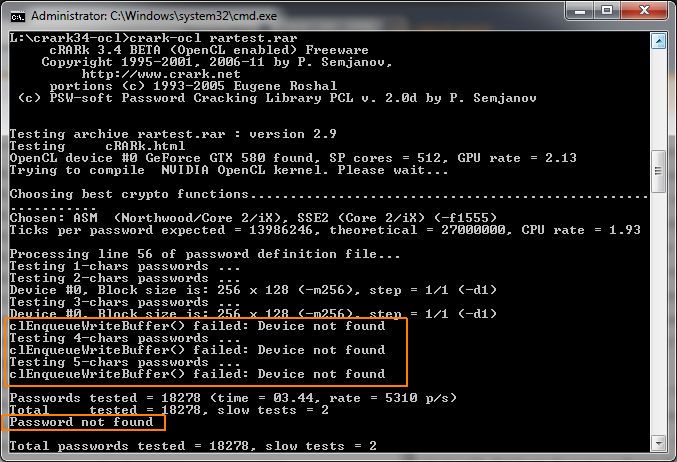

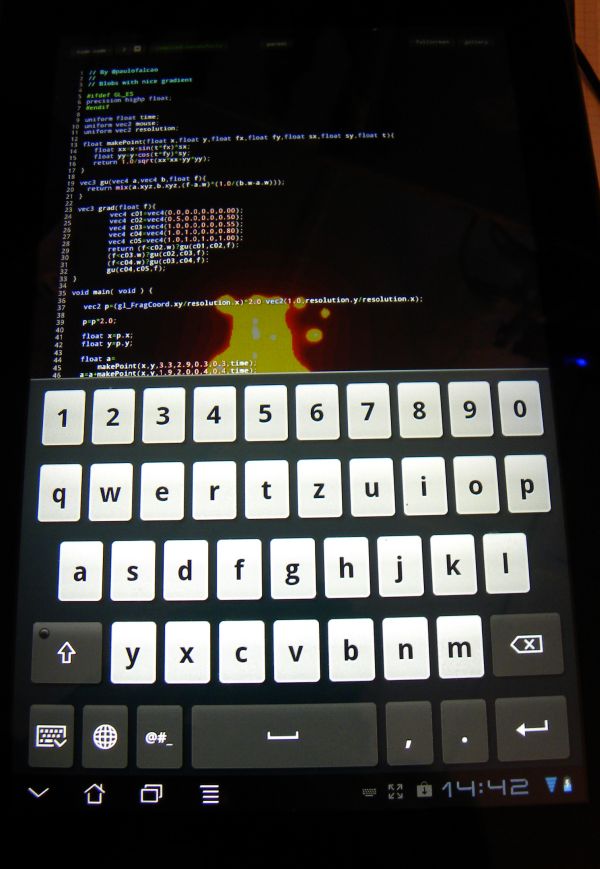

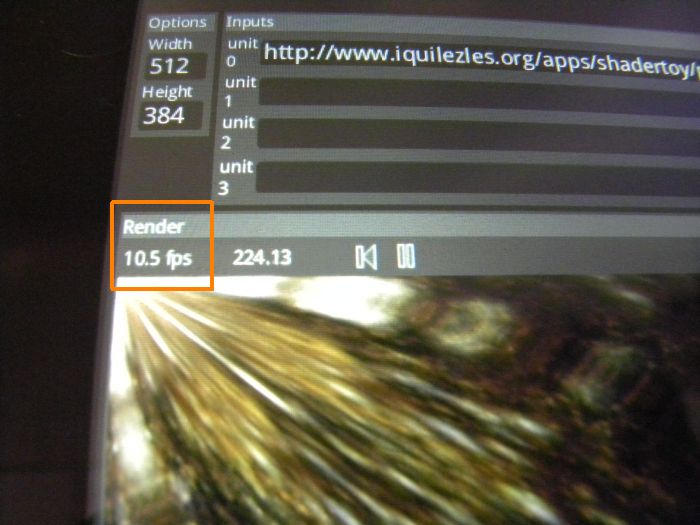

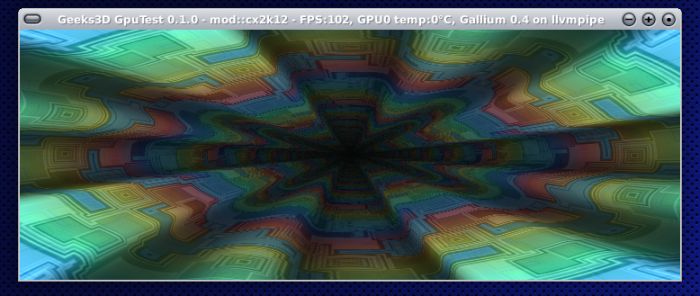

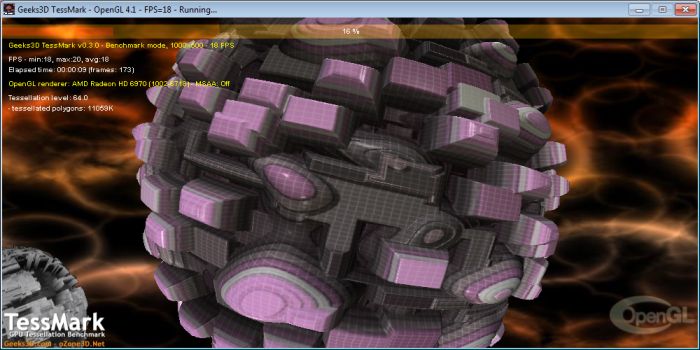

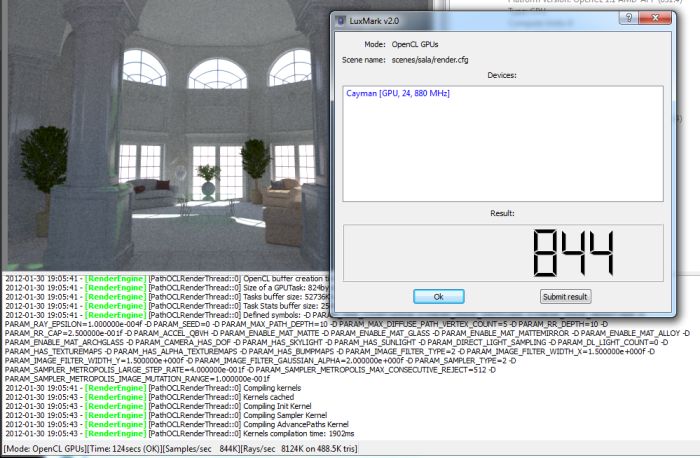

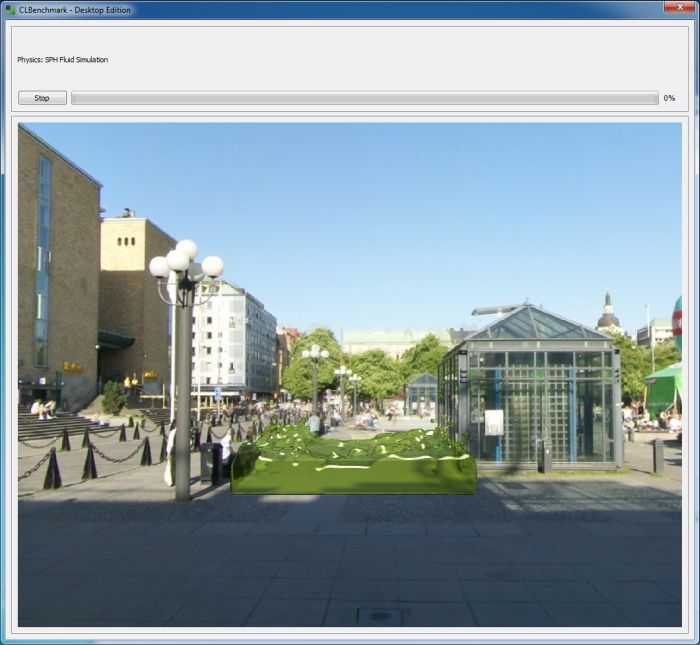

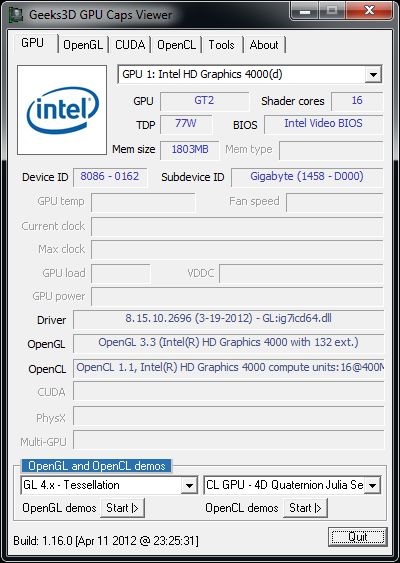

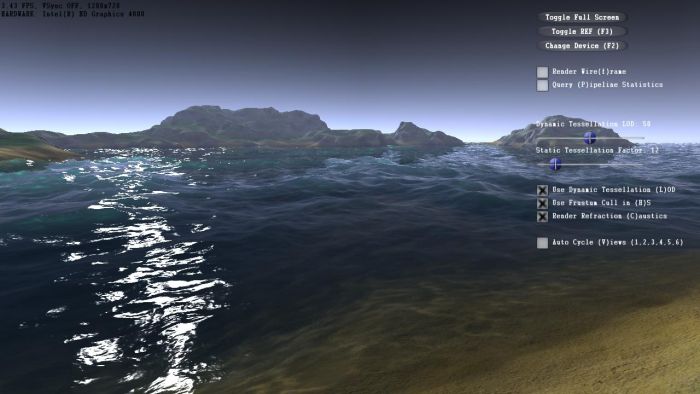

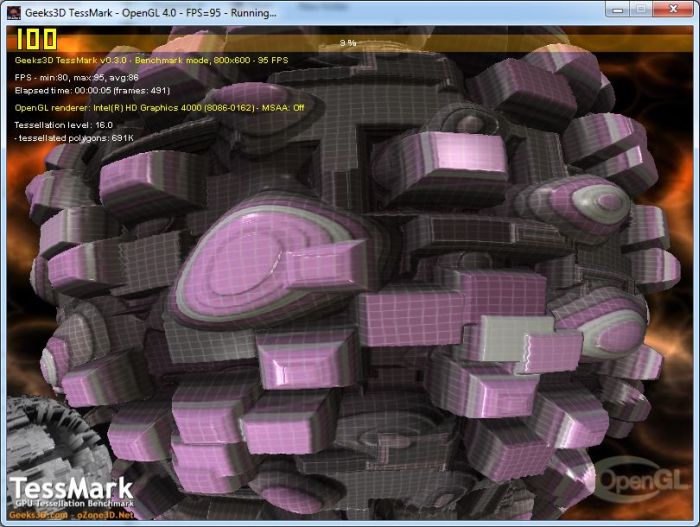

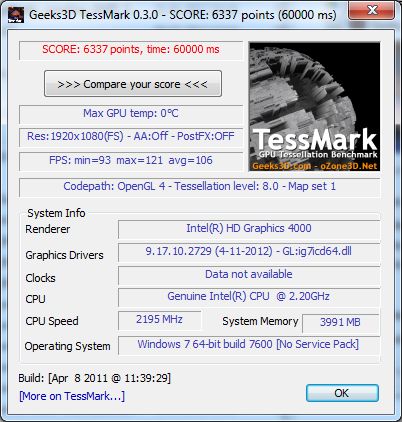

The following screenshot shows an OpenGL 2 test with the LLVMpipe renderer (LLVMpipe because I plugged in a GTX 580, which Nouveau does not support yet):

![OpenGL test, Gallium3D llvmpipe software renderer]()

The demo runs at around 100 FPS, not bad for a software renderer.

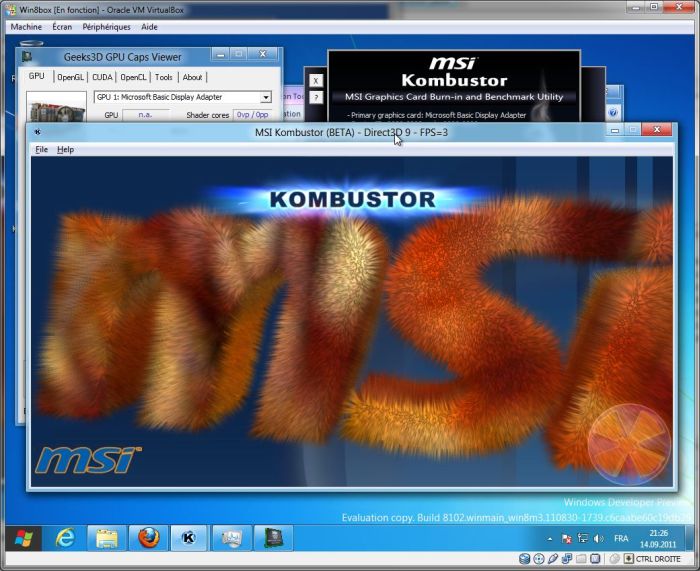

For older graphics cards, Nouveau comes with hardware-accelerated renderers. For example, the GeForce GTX 280 is driven by the NVA0 renderer. This page lists all the NVIDIA codenames used in the Nouveau 3D driver.
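
The pattern seen in these tests, a Nouveau hardware renderer when the chipset is supported and the llvmpipe software rasterizer otherwise, can be summarized with a small Python lookup. The table only contains the cards and codenames mentioned in this post, and the “accelerated” set reflects what I observed under Dreamlinux 5.0. Mesa’s real driver selection is more involved, so treat the hypothetical `pick_renderer` as a model of the outcome, not of the mechanism.

```python
# Cards and Nouveau chipset codenames mentioned in this post.
CODENAMES = {
    "GeForce GTX 280": "NVA0",
    "GeForce GTX 480": "NVC0",
    "GeForce GTX 580": "NVC8",
}

# Chipsets that got a Nouveau 3D renderer in my Dreamlinux 5.0 tests
# (NVC8 acceleration only arrives with Linux 3.2).
ACCELERATED = {"NVA0", "NVC0"}


def pick_renderer(card):
    """Hypothetical model: hardware renderer if available, else llvmpipe."""
    chipset = CODENAMES.get(card)
    if chipset in ACCELERATED:
        return f"Nouveau {chipset}"
    return "llvmpipe"  # software fallback, as with the GTX 580


if __name__ == "__main__":
    for card in CODENAMES:
        print(f"{card}: {pick_renderer(card)}")
```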

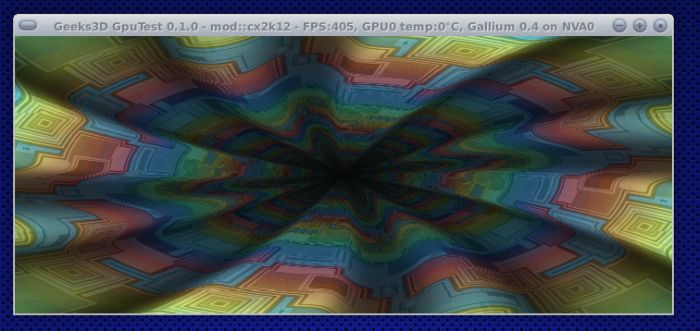

I plugged in a GTX 280 in place of the GTX 580 and relaunched my OpenGL test:

![Nouveau driver, OpenGL test, NVA0 renderer]()

400 FPS for the Nouveau NVA0 renderer, nice!

According to this page about the freshly released Linux 3.2 kernel, the Nouveau driver will soon provide hardware acceleration for the GTX 500 series, for example via the NVC8 renderer for the GTX 580:

> The Nouveau driver now uses the acceleration functions that are available with the auto-generated firmware on the Fermi graphic cores NVC1 (GeForce GT 415M, 420, 420M, 425M, 430, 435M, 525M, 530, 540M, 550M and 555, as well as Quadro 600 and 1000M), NVC8 (GeForce GTX 560 Ti OEM, 570, 580 and 590 as well as Quadro 3000M, 4000M and 5010M) and NVCF (GeForce GTX 550 Ti and 560M) chips; Linux 3.2 will be the first kernel version to support the latter graphics chips. Several other kernel modifications provide the foundations for power saving features in the Nouveau driver which future kernel versions are expected to use.

Can’t wait to test it!
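
The kernel note quoted above boils down to a version check: acceleration for the NVC1, NVC8 and NVCF chips needs Linux 3.2 or later. Here is a hedged Python sketch of that check. The function names are hypothetical, the release strings are examples in the `uname -r` style, and the function deliberately answers `None` for chipsets the quoted note does not mention, since it says nothing about them.

```python
import re

# Fermi chipsets whose acceleration, per the Linux 3.2 note quoted above,
# relies on the auto-generated firmware.
FERMI_3_2 = {"NVC1", "NVC8", "NVCF"}


def kernel_version(release):
    """Extract (major, minor) from a `uname -r` style string like '3.2.0-1-686-pae'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if not match:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def fermi_acceleration(chipset, release):
    """True/False for the chips named in the 3.2 note, None for any other chip."""
    if chipset not in FERMI_3_2:
        return None
    # Integer tuples compare numerically, so (3, 10) >= (3, 2) as expected.
    return kernel_version(release) >= (3, 2)


if __name__ == "__main__":
    for release in ("3.1.0-1-686-pae", "3.2.0-1-686-pae"):
        print(release, fermi_acceleration("NVC8", release))
```

Comparing integer tuples rather than raw strings matters here: as strings, "3.10" would sort before "3.2".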

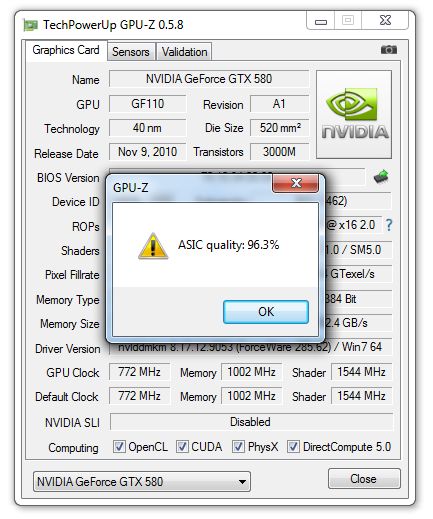

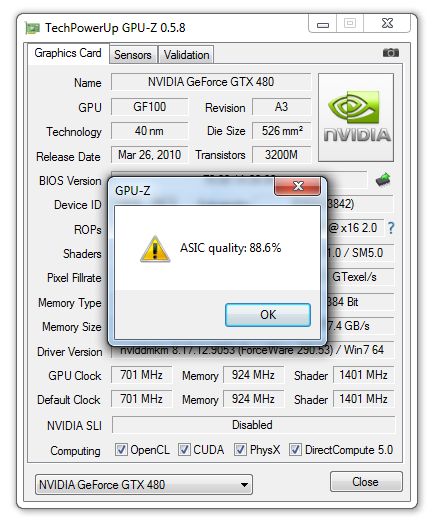

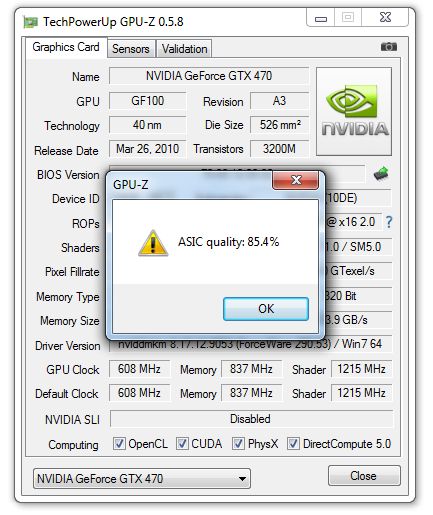

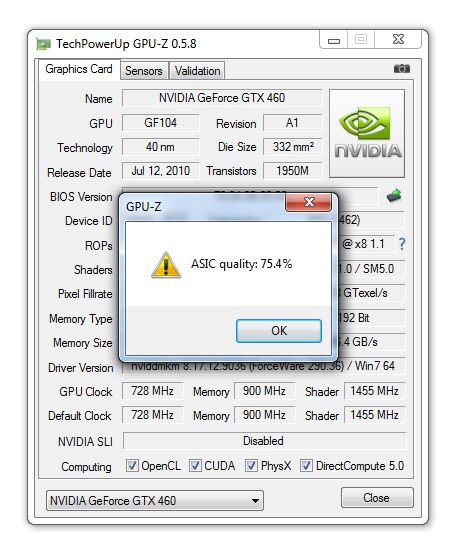

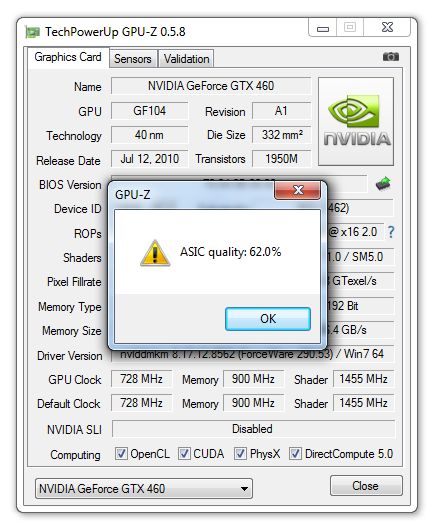

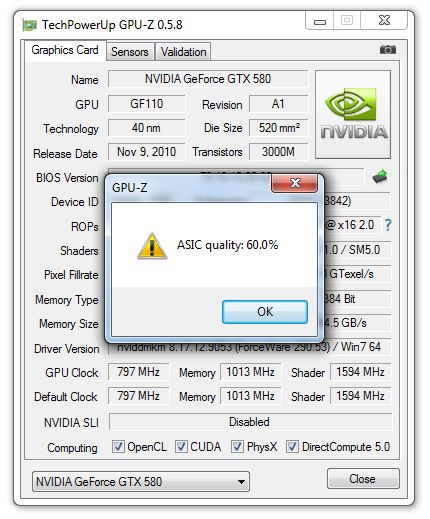

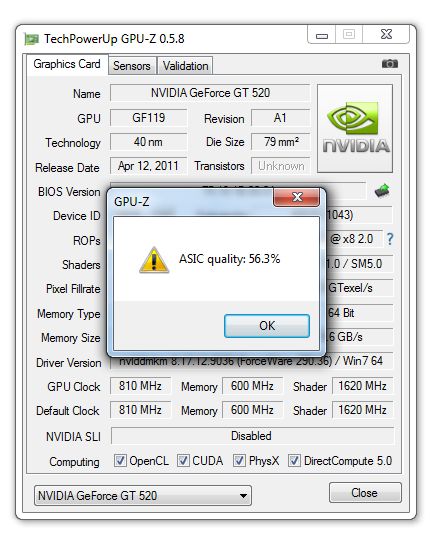

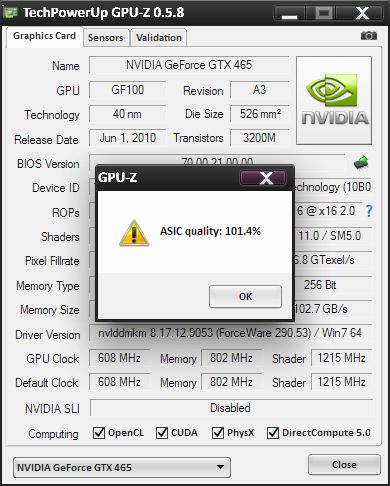

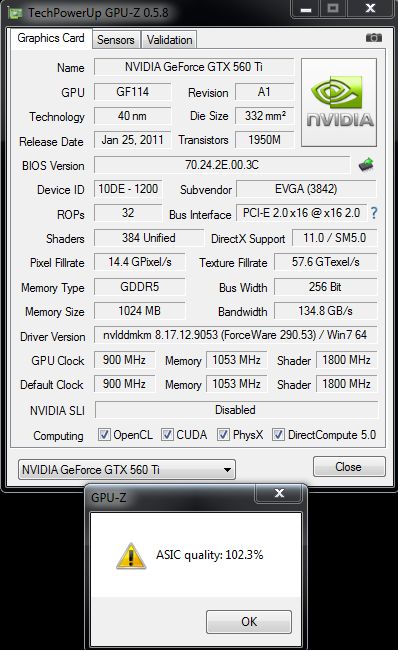

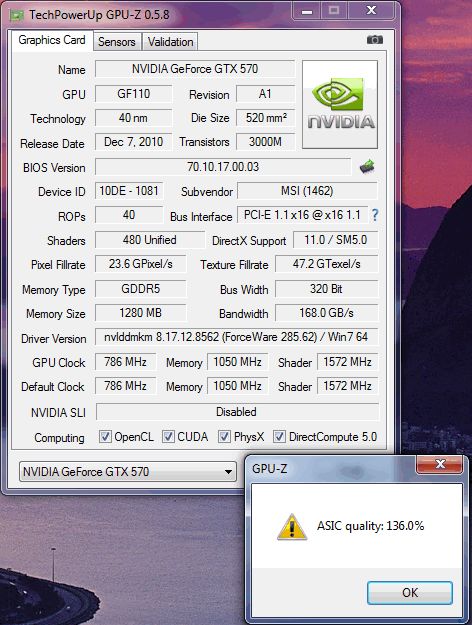

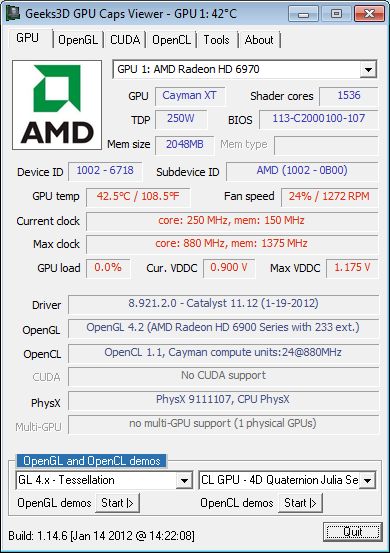

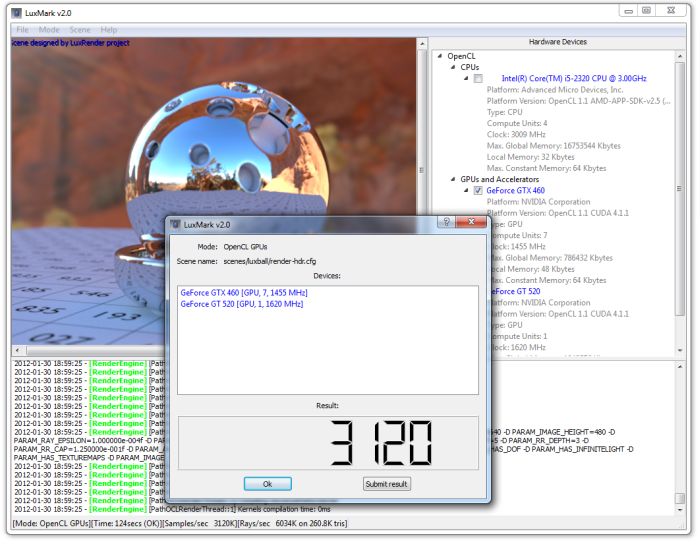

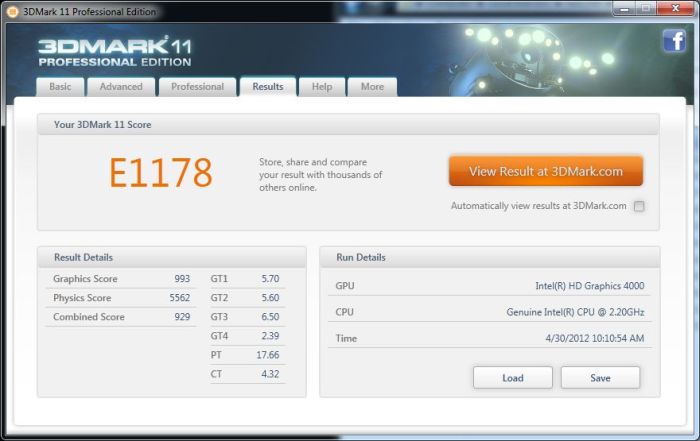

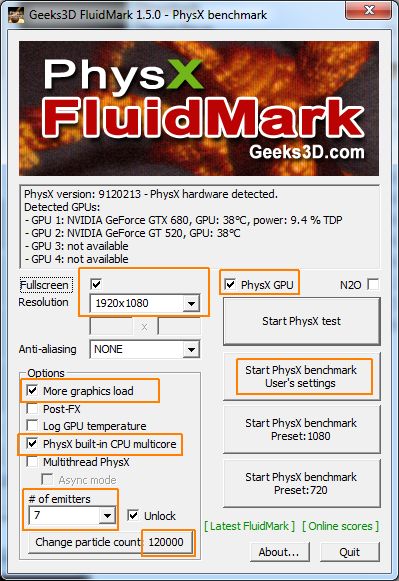

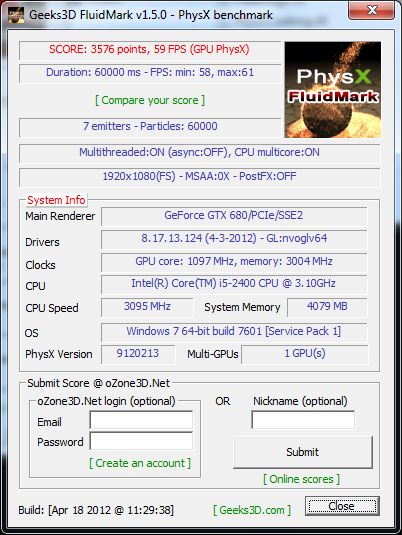

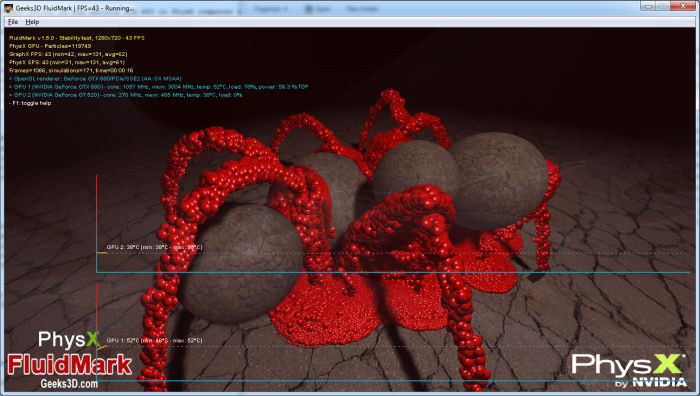

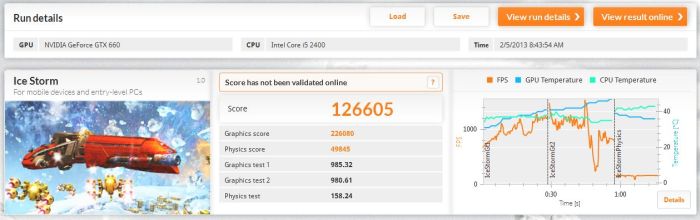

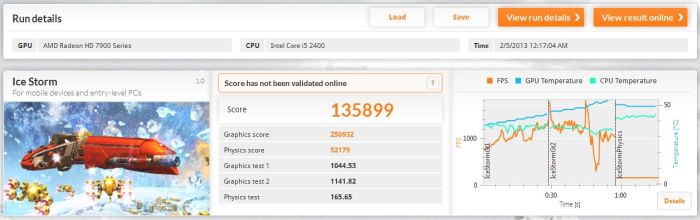

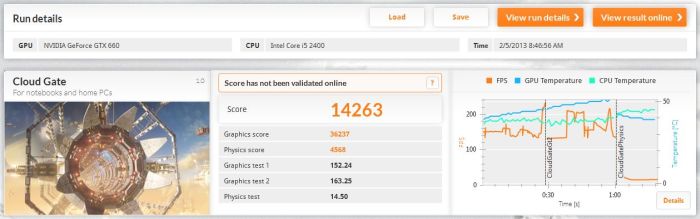

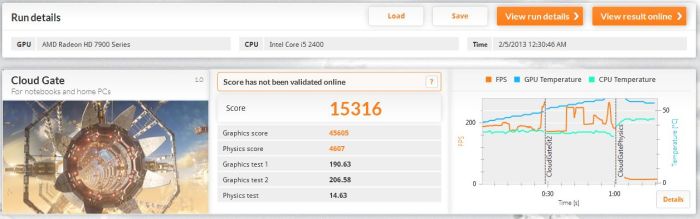

Now the question: is the Nouveau driver fast, especially compared to NVIDIA’s proprietary driver?

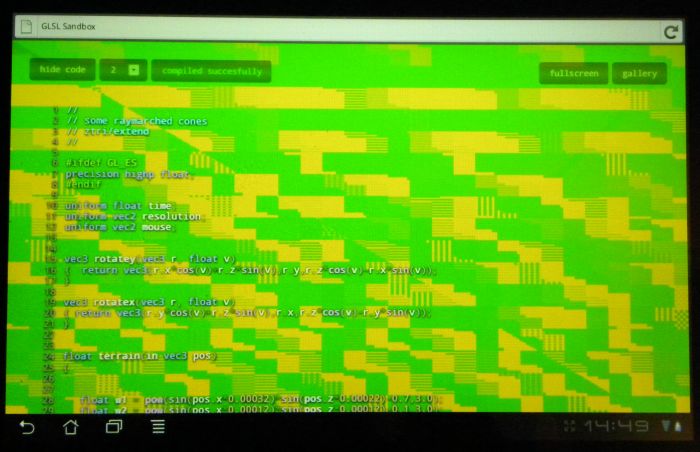

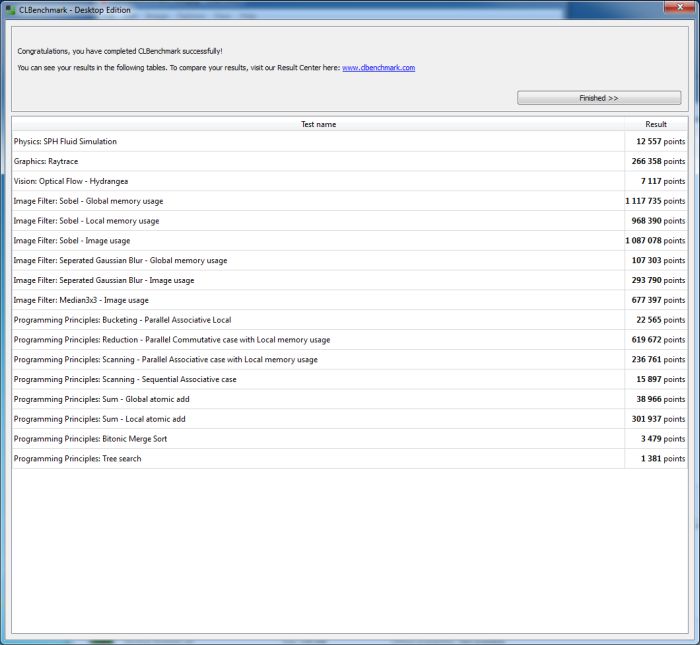

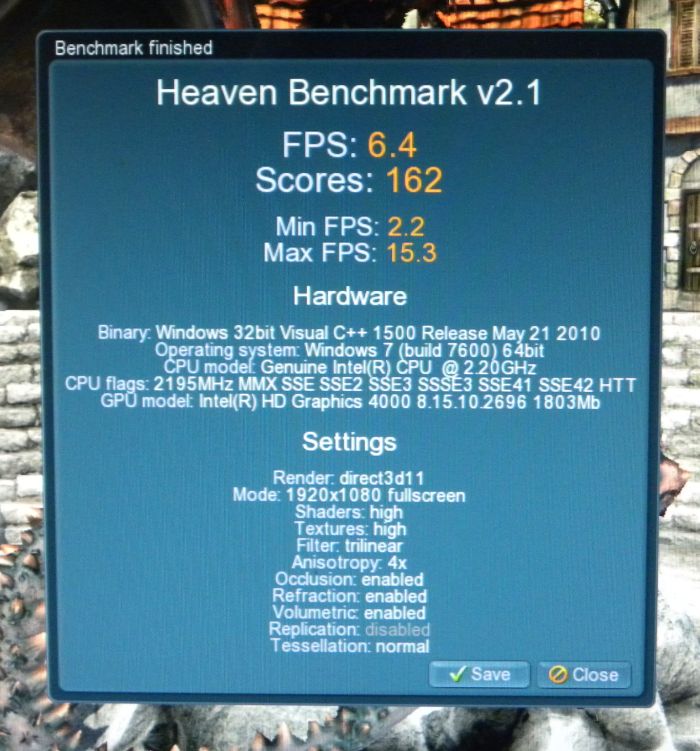

To find out, I tested my OpenGL demo under BackBox Linux 2.01. I love all these Linux distros ;)

BackBox is based on Ubuntu and comes with the NVIDIA R270.41 driver (or maybe I got R270.41 through an update, I can’t remember). I launched my test app:

![Backbox Linux, OpenGL test, R270.41 driver, GeForce GTX 280]()

Ouch! More than 5000 FPS (still with the GTX 280).

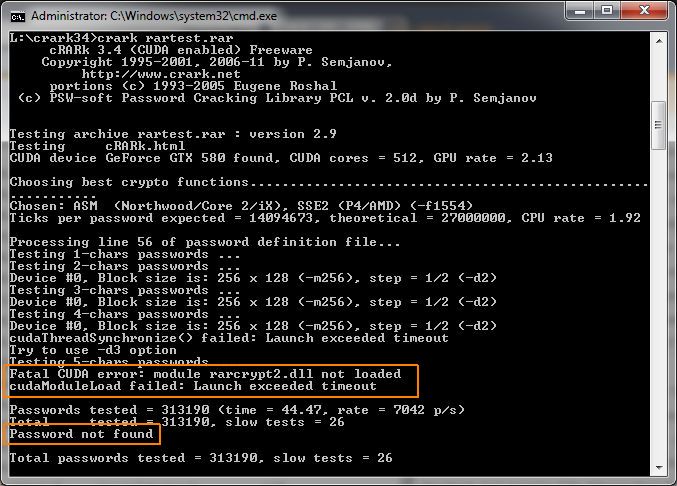

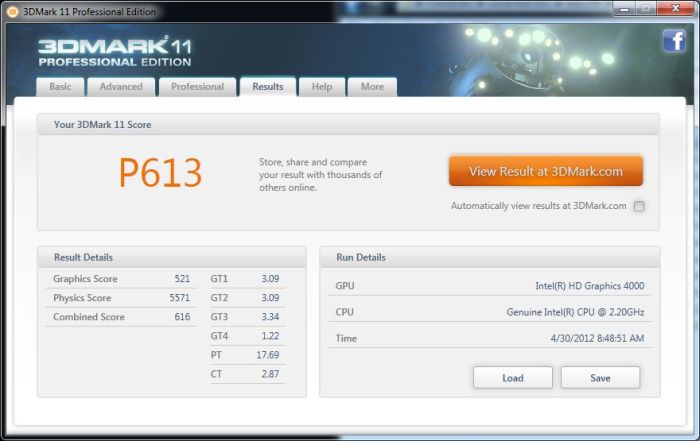

And with a GeForce GTX 480?

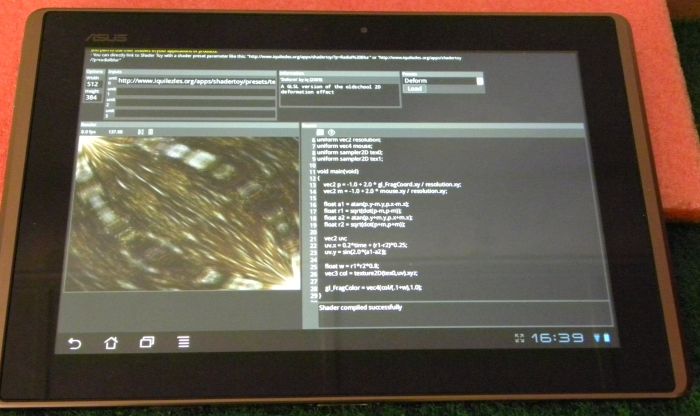

This time, it’s the Nouveau NVC0 renderer that is used to drive the GTX 480 under Dreamlinux 5.0:

![Dreamlinux, OpenGL test, GeForce GTX 480, Nouveau NVC0 renderer]()

Around 600 FPS.

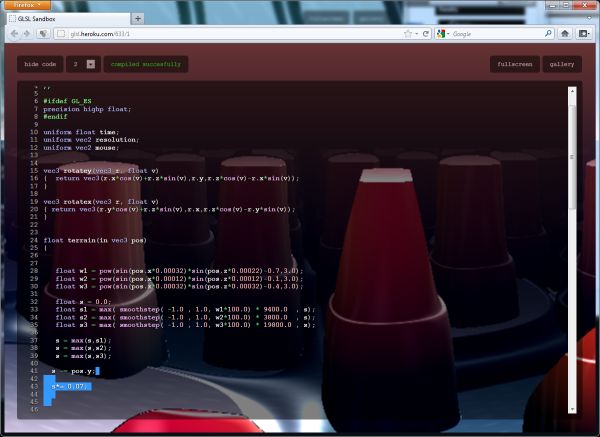

Now, the GTX 480 with the NVIDIA proprietary driver under BackBox 2.01:

![Backbox Linux, OpenGL test, R270.41 driver, GeForce GTX 480]()

Around 10000 FPS :D

Phoronix has published a performance comparison (Nouveau’s OpenGL Performance Approaches The NVIDIA Driver) using entry-level cards. For GPUs with few shader cores, the Nouveau driver approaches the performance of NVIDIA’s driver, but for GPUs with more shader cores, the gap is larger. And for recent GPUs, the gap is… abyssal!

The road to NVIDIA-level performance on recent GeForce cards is long, very long…
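
Converting the frame rates measured above into time per frame makes the gap easier to picture. The Python sketch below only uses the approximate readings from my screenshots (“around”, “more than”), so the ratios are rough orders of magnitude, not benchmark results.

```python
# Approximate FPS readings from the screenshots in this post.
RESULTS = {
    "GTX 280": {"nouveau": 400, "nvidia": 5000},   # Nouveau NVA0 vs R270.41
    "GTX 480": {"nouveau": 600, "nvidia": 10000},  # Nouveau NVC0 vs R270.41
}


def frame_time_ms(fps):
    """Milliseconds spent per frame at a given frame rate."""
    return 1000.0 / fps


def speedup(card):
    """How many times faster the proprietary driver ran the demo."""
    r = RESULTS[card]
    return r["nvidia"] / r["nouveau"]


if __name__ == "__main__":
    for card, r in RESULTS.items():
        print(f"{card}: Nouveau {frame_time_ms(r['nouveau']):.2f} ms/frame, "
              f"NVIDIA {frame_time_ms(r['nvidia']):.2f} ms/frame, "
              f"x{speedup(card):.1f}")
```

For the GTX 280 this gives 2.5 ms versus 0.2 ms per frame (about 12.5 times faster with the NVIDIA driver), and for the GTX 480 about 1.67 ms versus 0.1 ms (about 16.7 times faster).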

If you’re not a Linux noob like me (actually, even if you are a Linux noob!), don’t hesitate to add information or to point out my mistakes. Always Share Your Knowledge!

Some references:

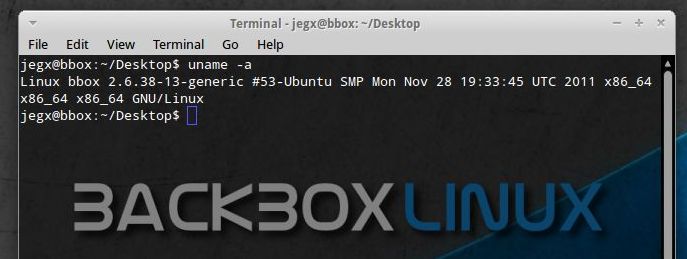

Update (2012.01.11): here are the kernel versions for Dreamlinux and Backbox Linux:

![Dreamlinux, kernel information]()

![Backbox Linux, kernel information]()